The Evolution: MLOps to LLMOps

MLOps (Machine Learning Operations) brought the discipline of DevOps to data science. It solved the "throw it over the wall" problem where data scientists built models that engineers couldn't run.

In 2026, with the dominance of Generative AI, we have evolved to LLMOps. The fundamental principles (automation, reproducibility, monitoring) remain, but the artefacts have changed.

- MLOps Artefacts: .pkl/.onnx files, tabular data, accuracy metrics.

- LLMOps Artefacts: prompt templates, vector indexes, LoRA adapters, semantic evaluation scores.

The goal remains the same: Reliability at Speed.

Core Pillars of AI Operations

1. Reproducibility

Can you recreate a model from six months ago? That requires versioning not just code but also data (DVC), the environment (Docker), and configuration (Hydra/Pydantic). In LLMOps, it also means versioning the system prompt and the RAG retrieval logic.
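One way to make this concrete is to hash every input that defines a build into a single fingerprint and store it with the model. The sketch below is a minimal illustration that assumes the data version comes from DVC and the configuration is already resolved to a plain dictionary; the fingerprint function and the sample values are hypothetical.

```python
import hashlib
import json


def fingerprint(git_commit: str, data_version: str, config: dict, system_prompt: str) -> str:
    """Hash everything that defines a build into one stable identifier (hypothetical helper)."""
    manifest = {
        "git_commit": git_commit,
        "data_version": data_version,  # assumed: a DVC data hash or tag
        "config": config,  # assumed: a resolved Hydra/Pydantic config as a dict
        "system_prompt_sha256": hashlib.sha256(system_prompt.encode("utf-8")).hexdigest(),
    }
    # sort_keys makes the serialisation, and therefore the hash, independent of key order
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Example with made-up values: changing any input, including the prompt, changes the result
build_id = fingerprint("3f2a9c1", "data-v12", {"lr": 0.001, "epochs": 3}, "You are a helpful assistant.")
```

Comparing the stored fingerprint with a rebuilt one tells you whether the rebuild used exactly the same code, data, configuration, and prompt.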

2. Automation

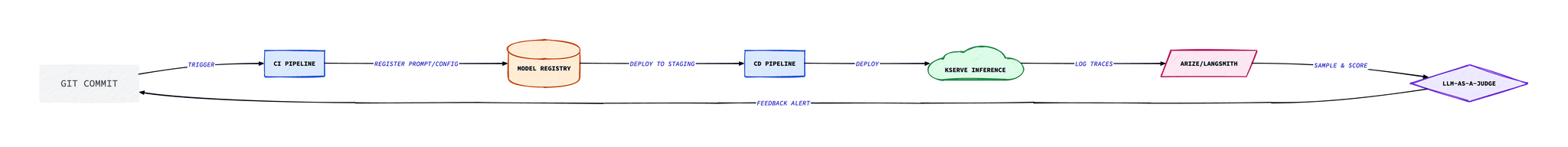

Manual deployments are forbidden. Training, evaluation, and deployment should be triggered by Git commits or data arrival. The "human in the loop" approves the deployment, but the machine executes it.
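The rule can be sketched as a pipeline that an event (a Git push or new data) triggers, that runs its checks with no manual steps, and that deploys only after a person approves. This is a minimal illustration in plain Python, not the API of any CI system; PipelineRun, run_pipeline and the step callables are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class PipelineRun:
    trigger: str  # e.g. "git_push" or "new_data"
    log: List[str] = field(default_factory=list)


def run_pipeline(
    run: PipelineRun,
    steps: List[Tuple[str, Callable[[], bool]]],
    approved: Callable[[], bool],
    deploy: Callable[[], None],
) -> bool:
    """Run automated steps in order; deploy only if all pass and a human approves."""
    for name, step in steps:
        ok = step()
        run.log.append(f"{name}: {'pass' if ok else 'fail'}")
        if not ok:
            return False  # fail fast: nothing is deployed
    if not approved():
        run.log.append("approval: rejected")
        return False
    run.log.append("approval: granted")
    deploy()  # the person approves; the pipeline performs the deployment
    run.log.append("deploy: done")
    return True
```

In a real setup the steps would be CI jobs, and approved() would read the outcome of a review gate in your CI/CD tool.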

3. Observability

Monitoring CPU/RAM is not enough. You need to monitor prediction drift (changes in the output distribution), data drift (changes in the input distribution) and, for LLMs, hallucination rate and toxicity.
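Data drift and prediction drift are often quantified with the Population Stability Index (PSI), which compares the binned distribution of a live sample against a reference sample: PSI is the sum over bins of (actual% - expected%) × ln(actual% / expected%). The sketch below assumes a single numeric feature or model score and equal-width bins taken from the reference range; the function name, bin count, and eps smoothing are illustrative choices.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of a live sample (actual) against a reference sample (expected)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the reference range fall into the edge bins
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, though thresholds are best tuned per feature. Hallucination rate and toxicity are not distribution statistics and need their own evaluators.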

The Model Registry Pattern

The Model Registry is the heart of MLOps. It acts as the bridge between training and deployment.

Never deploy a file path. Deploy a registered model version.

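```python
# Example: Registering a model with MLflow
import mlflow
import mlflow.sklearn
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic data stands in for your real (e.g. fraud) training set
X, y = make_classification(n_samples=1_000, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

with mlflow.start_run():
    # Train your model
    model = LogisticRegression(max_iter=1_000).fit(X_train, y_train)

    # Log the measured metric rather than a hard-coded value
    mlflow.log_metric("accuracy", model.score(X_test, y_test))

    # Log the model and register it as a new version of a named model
    mlflow.sklearn.log_model(
        model,
        "model",
        registered_model_name="fraud_detection_prod",
    )
```

The registry manages stages (Staging, Production, Archived), and your CI/CD pipeline promotes models between stages based on automated test results. Recent MLflow releases deprecate stages in favour of version aliases such as "champion", but the promotion pattern is the same.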
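To show the stage lifecycle without a tracking server, here is a toy, in-memory registry for a single model name. It is a hypothetical illustration, not the MLflow client API: a version moves from None to Staging to Production only when its automated tests pass, promoting a new version archives the old one, and deployments resolve the current Production version instead of a file path.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ToyRegistry:
    """Hypothetical in-memory registry for one model name; not the MLflow API."""
    stages: Dict[int, str] = field(default_factory=dict)  # version -> stage

    def register(self) -> int:
        version = len(self.stages) + 1
        self.stages[version] = "None"  # new versions start with no stage
        return version

    def promote(self, version: int, tests_passed: bool) -> str:
        """Advance a version one stage (None -> Staging -> Production) if tests passed."""
        current = self.stages[version]
        if not tests_passed:
            return current  # failed tests block promotion
        if current == "None":
            self.stages[version] = "Staging"
        elif current == "Staging":
            # Archive whatever is live so exactly one version serves production
            for v, stage in self.stages.items():
                if stage == "Production":
                    self.stages[v] = "Archived"
            self.stages[version] = "Production"
        return self.stages[version]

    def production_version(self) -> Optional[int]:
        """The version a deployment should resolve, instead of a file path."""
        return next((v for v, s in self.stages.items() if s == "Production"), None)
```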

CI/CD/CT: Continuous Training

DevOps has CI/CD. MLOps adds CT (Continuous Training).

- CI (Continuous Integration): Test code, test data schemas, lint prompts.

- CD (Continuous Deployment): Deploy model service, run canary tests, shift traffic.

- CT (Continuous Training): Detect drift, trigger retraining, evaluate new model, register if better.
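The CT bullet can be sketched as a single champion/challenger cycle. The drift score could come from any drift statistic, and retrain and evaluate stand in for your own training job and golden-dataset evaluation; the function name, parameters, and the min_gain margin are assumptions for illustration.

```python
from typing import Callable, Optional


def continuous_training_step(
    drift_score: float,
    drift_threshold: float,
    retrain: Callable[[], object],
    evaluate: Callable[[object], float],
    champion_score: float,
    min_gain: float = 0.0,
) -> Optional[object]:
    """One CT cycle: detect drift, retrain, evaluate, and return the new model only if it is better."""
    if drift_score <= drift_threshold:
        return None  # no drift detected: keep the current model
    challenger = retrain()
    challenger_score = evaluate(challenger)
    # Register only if the challenger beats the champion by at least min_gain
    if challenger_score > champion_score + min_gain:
        return challenger
    return None
```

A non-None result is the signal to register the challenger as a new model version.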

For LLMs, CT often means "Continuous Fine-tuning" or "Continuous RAG Updates". Updating your vector database with nightly document syncs is a form of CT for knowledge.
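A nightly sync does not have to re-embed the whole corpus. One approach, sketched here under the assumption that you keep a content hash per document ID next to the index, is to diff the source documents against those hashes and re-embed only what changed. plan_sync is a hypothetical helper; the actual upsert and delete calls depend on your vector database.

```python
import hashlib
from typing import Dict, List, Tuple


def plan_sync(
    source_docs: Dict[str, str], indexed_hashes: Dict[str, str]
) -> Tuple[List[str], List[str]]:
    """Compare source documents with the index; return (to_upsert, to_delete) document IDs."""
    current = {
        doc_id: hashlib.sha256(text.encode("utf-8")).hexdigest()
        for doc_id, text in source_docs.items()
    }
    # New or edited documents need re-chunking and re-embedding
    to_upsert = sorted(d for d, h in current.items() if indexed_hashes.get(d) != h)
    # Documents deleted at the source should leave the index too
    to_delete = sorted(d for d in indexed_hashes if d not in current)
    return to_upsert, to_delete
```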

Monitoring & Observability

In 2026, we don't just log "success/fail". We log the full trace.

LLM Tracing

Using OpenTelemetry and its semantic conventions for generative AI, we trace the lifecycle of a request:

- Retrieval: How long did the vector search take? What chunks were returned?

- Prompt Construction: What did the final assembled prompt look like?

- Generation: What was the TTFT (Time To First Token)? What was the total token count?

- Guardrails: Did the output trigger safety filters?
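These four stages map naturally onto one span each. The sketch below records them with the standard library only, as a stand-in for an OpenTelemetry tracer, and shows one way to measure TTFT from a token stream. span, consume_stream and the attribute names are hypothetical; they are not the OpenTelemetry API or its semantic-convention names.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterable, Iterator, List


@contextmanager
def span(trace: List[Dict], name: str, **attrs) -> Iterator[Dict]:
    """Record one named stage of a request with its duration (stand-in for an OTel span)."""
    record = {"name": name, **attrs}
    start = time.perf_counter()
    try:
        yield record  # the caller can attach attributes while the stage runs
    finally:
        record["duration_s"] = time.perf_counter() - start
        trace.append(record)


def consume_stream(tokens: Iterable[str], record: Dict) -> str:
    """Drain a token stream, recording TTFT (from when pulling starts) and the token count."""
    start = time.perf_counter()
    out: List[str] = []
    for tok in tokens:
        if not out:
            record["ttft_s"] = time.perf_counter() - start
        out.append(tok)
    record["output_tokens"] = len(out)  # assumes each streamed item is one token
    return "".join(out)


trace: List[Dict] = []
with span(trace, "retrieval", top_k=2) as s:
    s["chunks"] = ["chunk-17", "chunk-42"]  # stand-in for vector search results
with span(trace, "prompt_construction") as s:
    s["prompt"] = "Context: ...\nQuestion: ..."
with span(trace, "generation") as s:
    answer = consume_stream(iter(["Hel", "lo", "!"]), s)  # stand-in for a streaming LLM call
with span(trace, "guardrails") as s:
    s["blocked"] = False  # result of a hypothetical safety filter
```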

Feedback Loops

Every user interaction (thumbs up, edit, copy) is a training signal. Operationalise this data pipeline to feed directly back into your evaluation datasets.
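Turning those signals into evaluation data can start as a simple mapping step. The sketch below assumes a hypothetical event schema (prompt, response, signal, and an optional edited_text) and treats an edit as a corrected reference answer, a thumbs-down as a case to review, and a thumbs-up or copy as an accepted answer; these labels are illustrative policy choices, not a standard.

```python
from typing import Dict, List


def to_eval_cases(events: List[Dict]) -> List[Dict]:
    """Turn user feedback events (hypothetical schema) into candidate evaluation rows."""
    cases = []
    for e in events:
        if e["signal"] == "edit" and e.get("edited_text"):
            # The user's correction is a better reference than the model's output
            cases.append({"input": e["prompt"], "reference": e["edited_text"], "label": "corrected"})
        elif e["signal"] == "thumbs_down":
            # No reference yet: route to human review
            cases.append({"input": e["prompt"], "reference": None, "label": "needs_review"})
        elif e["signal"] in ("thumbs_up", "copy"):
            cases.append({"input": e["prompt"], "reference": e["response"], "label": "accepted"})
        # Unknown signals are ignored
    return cases
```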

Infrastructure & Serving

Serving infrastructure has matured. Kubernetes is the standard OS for AI.

KServe & Ray Serve

KServe: Kubernetes-native standard. Good for simple models, integrates with Istio for canary rollouts.

Ray Serve: Python-native, flexible. Best for complex pipelines (e.g., chaining multiple models, custom logic). Widely used for LLM serving.
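In production the serving platform performs the canary traffic split for you; the underlying idea can be sketched in plain Python. This is a minimal illustration, assuming requests carry a stable ID; route is a hypothetical function, not part of KServe, Istio, or Ray Serve.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Send roughly canary_percent% of requests to the canary, deterministically per ID."""
    # Hashing the ID means the same request or user always lands on the same variant
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # a bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"
```

Raising canary_percent in steps while watching error and quality metrics is the "shift traffic" part of CD.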

GPU Sharing (MIG/MPS)

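GPUs are expensive. Use MIG (Multi-Instance GPU) on A100s/H100s to partition one large GPU into up to seven isolated instances for smaller models or dev environments. MPS (Multi-Process Service) is the lighter-weight option: it lets several processes share one GPU concurrently, but without MIG's hardware isolation.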

The 2026 MLOps Toolchain

The "Modern AI Stack" has stabilised. Here are the category leaders:

| Category | Tools |
|---|---|
| Model Registry | MLflow, Weights & Biases |
| Orchestration | Kubeflow Pipelines, Airflow, Prefect |
| Feature Store / Vector DB | Feast, Pinecone, Weaviate |
| Serving | KServe, Ray Serve, vLLM, Triton |
| Monitoring | Arize, HoneyHive, LangSmith, Grafana |

Production Readiness Checklist

- ✅ Reproducibility: Can I rebuild the model from git hash + data version?

- ✅ Testing: Do I have a golden dataset and automated evaluation pipeline?

- ✅ Monitoring: Are alarms set for latency, errors, and output quality?

- ✅ Fallback: Is there a mechanism to fall back to a previous model or a rule-based system?

- ✅ Cost Control: Are there budget caps and rate limits in place?
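For the fallback item, the simplest mechanism is to try backends in priority order. In this sketch the backends are hypothetical callables (for example, the current model, then the previous registered version) and rule_based is a deterministic last resort. Catching every exception is a simplifying assumption; in practice you would narrow it to timeouts, rate limits, and failed guardrail checks.

```python
from typing import Callable, List


def answer_with_fallback(
    prompt: str,
    backends: List[Callable[[str], str]],
    rule_based: Callable[[str], str],
) -> str:
    """Try each model backend in order; fall back to a rule-based answer if all fail."""
    for backend in backends:
        try:
            return backend(prompt)
        except Exception:
            continue  # e.g. timeout, rate limit, or a failed guardrail check
    return rule_based(prompt)
```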

Conclusion

MLOps and LLMOps are about discipline. They are the difference between an AI demo that wows stakeholders for 5 minutes and an AI product that drives business value for 5 years. Invest in your platform, your pipelines, and your observability. The models will change every month, but your operational excellence will compound.